AI Slop vs Constrained UI: Why Most Generative Interfaces Fail

TL;DR

AI can generate structured interface layouts and assemble component hierarchies from natural language prompts. When generation is unconstrained, the output diverges from design systems, lacks determinism, and requires downstream refactoring before deployment. Production-grade generative UI requires predefined component registries, validated schemas, and explicit architectural constraints, which is the model implemented by Puck and its constrained generation layer, Puck AI.

Introduction

AI systems can now generate complete interface layouts from natural language prompts. Tasks that previously required manual section planning, component selection, and layout composition can now start from a single instruction.

Yet when these generated interfaces are evaluated against real production standards, limitations become clear. Outputs often conflict with established design systems, introduce structural inconsistencies, and produce layouts that require refactoring before integration.

What appears efficient at the prompt layer frequently shifts complexity downstream into engineering workflows. This gap between demo output and deployable software has led to growing skepticism around generative UI. The informal label “AI slop” captures it: output that appears complete but fails architectural validation.

In this article, we will examine where AI meaningfully supports interface generation, where it breaks down in production environments, and why constrained, schema-driven systems are essential for making generative UI operational at scale.

What AI Is Actually Good At in UI Workflows

Before evaluating the limitations of generative UI, it is important to isolate where it provides measurable value. AI is effective at accelerating early-stage interface assembly, particularly when the objective is to convert high-level intent into an initial layout draft.

Consider a product team building a new SaaS landing page. A prompt such as “Create a landing page for an AI analytics platform with a hero section, feature highlights, pricing tiers, and customer testimonials” can reliably produce a logically organized page structure within seconds. The output may not be production-ready, but it establishes a usable structural baseline.

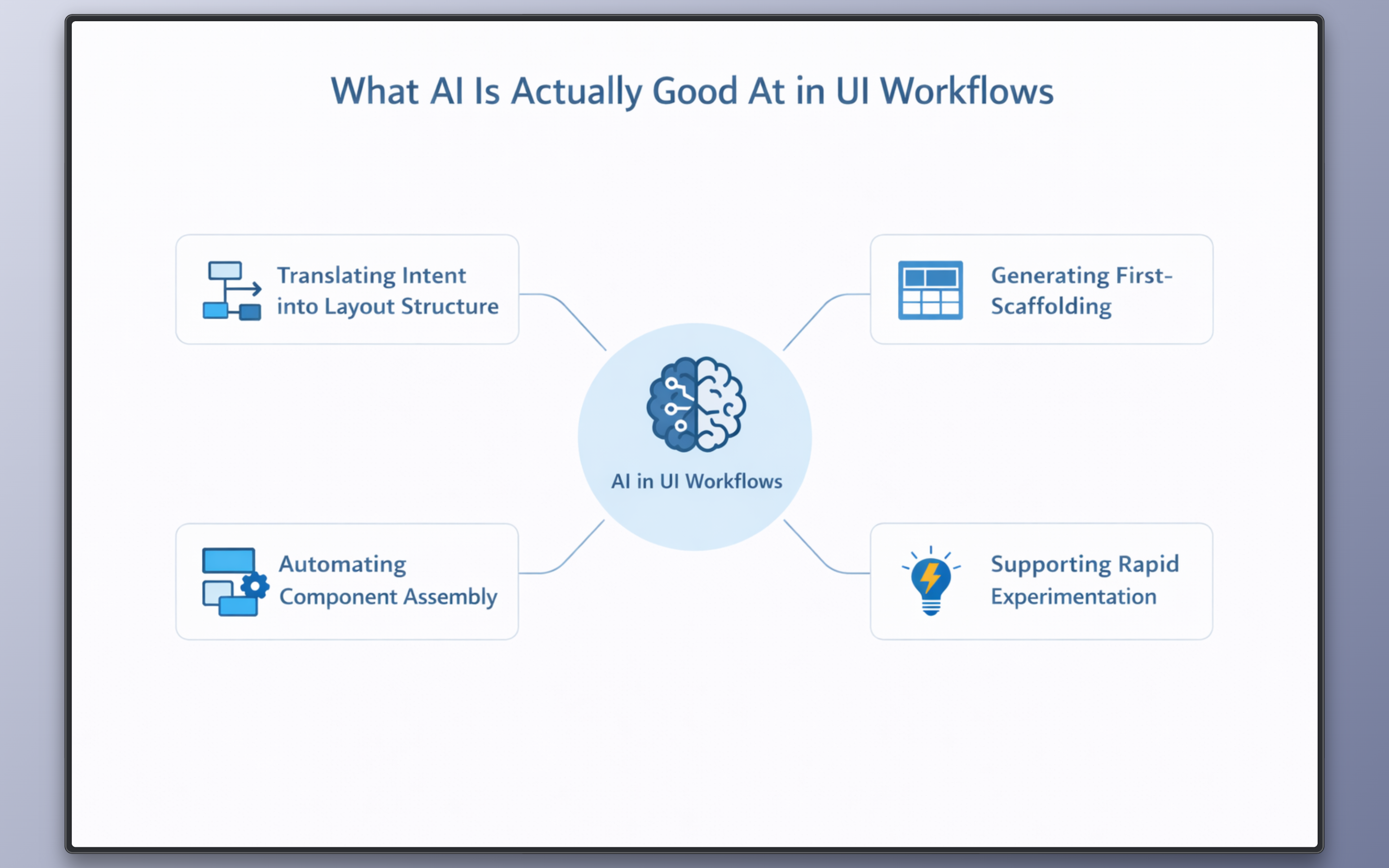

AI performs well in the following areas:

- Translating Intent into Layout Structure: AI can map abstract, well-known requirements into recognizable interface patterns. For example, it understands that a landing page typically includes a hero, feature highlights, social proof, and a call to action. This reduces the cognitive load of structuring the page from scratch.

- Generating First-Pass Scaffolding: AI can assemble a preliminary hierarchy of sections and placeholder content. This enables teams to visualize information architecture quickly before investing time in refinement.

- Automating Repetitive Component Assembly: When working with predefined components, AI can configure repeated structures such as feature cards, pricing tiers, or testimonial blocks with consistent prop patterns. This is particularly useful in systems with modular design libraries.

- Supporting Rapid Experimentation: AI allows teams to generate multiple layout variations in minutes, enabling faster exploration of structural alternatives without manual reconfiguration.

AI is therefore effective at structural acceleration, particularly in early-stage development where system rules, design boundaries, and composition patterns are still being defined.

In established product environments, however, structural acceleration must operate within existing architectural constraints to remain viable.

Where Unbounded UI Generation Breaks Down

Many unbounded generation tools produce raw code that must be reviewed, integrated, and redeployed before it can be used in production. Even when the output appears complete, it is not directly executable within an existing system. This shifts responsibility to engineering teams and introduces friction into workflows that are intended to be self-service.

For non-technical users such as marketers or content authors, this model is impractical. Page updates require developer involvement, slowing content publishing and limiting autonomy. Instead of enabling cross-functional workflows, generative UI becomes a developer-only tool.

Below are a few additional architectural limitations to consider:

- Design System Violations: Unbounded generation does not inherently respect spacing scales, typography tokens, or component composition rules. It may introduce arbitrary margins, inconsistent heading hierarchies, or layout patterns that are not part of the approved system. Even if visually acceptable, these deviations fragment the design language and undermine maintainability.

- Inconsistent Component Usage: In systems with established component libraries, specific components are intended for specific contexts. Free-form generation may misuse primitives, duplicate existing abstractions, or bypass higher-level components entirely. This creates parallel patterns that increase technical debt and weaken reuse.

- Non-Deterministic Outputs: Identical prompts can yield structurally different layouts across executions. In production environments, this lack of determinism complicates testing, review processes, and content governance. Predictability is a requirement for scalable systems.

- Brand and Compliance Drift: Without embedded context, models default to generic language and layout conventions. They lack awareness of regulatory constraints, accessibility standards, and brand-specific positioning. This introduces risk in industries where messaging and structure must adhere to policy.

- Output Requiring Engineering Cleanup: Generated code or markup frequently requires normalization before integration. Engineers must refactor styles, align components with existing abstractions, and correct structural inconsistencies. The perceived acceleration at generation time is offset by downstream rework.

Free-form UI generation, therefore, conflicts with production architecture. Systems designed for reliability, reuse, and governance require structured constraints, not unconstrained synthesis.
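The non-determinism point above can be made testable. The following is a minimal sketch, assuming each generation run can be reduced to a tree of component types. The `LayoutNode` shape and both function names are hypothetical and not tied to any particular tool. It compares structure only and ignores props and copy.

```typescript
// Hypothetical node shape for a generated layout (illustration only).
interface LayoutNode {
  type: string;
  children?: LayoutNode[];
}

// Reduce a tree to its structure: component types, order, and nesting.
function structuralSignature(node: LayoutNode): string {
  const children = (node.children ?? []).map(structuralSignature).join(",");
  return children ? `${node.type}(${children})` : node.type;
}

// Two runs agree only if their structural signatures are identical.
function sameStructure(a: LayoutNode, b: LayoutNode): boolean {
  return structuralSignature(a) === structuralSignature(b);
}

// Two outputs for the same prompt that differ only in section order.
const runA: LayoutNode = {
  type: "Page",
  children: [{ type: "Hero" }, { type: "FeatureGrid" }, { type: "PricingTable" }],
};
const runB: LayoutNode = {
  type: "Page",
  children: [{ type: "Hero" }, { type: "PricingTable" }, { type: "FeatureGrid" }],
};

console.log(structuralSignature(runA)); // Page(Hero,FeatureGrid,PricingTable)
console.log(sameStructure(runA, runB)); // false
```

A check like this could run in a review pipeline to flag prompts whose output structure drifts between executions.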

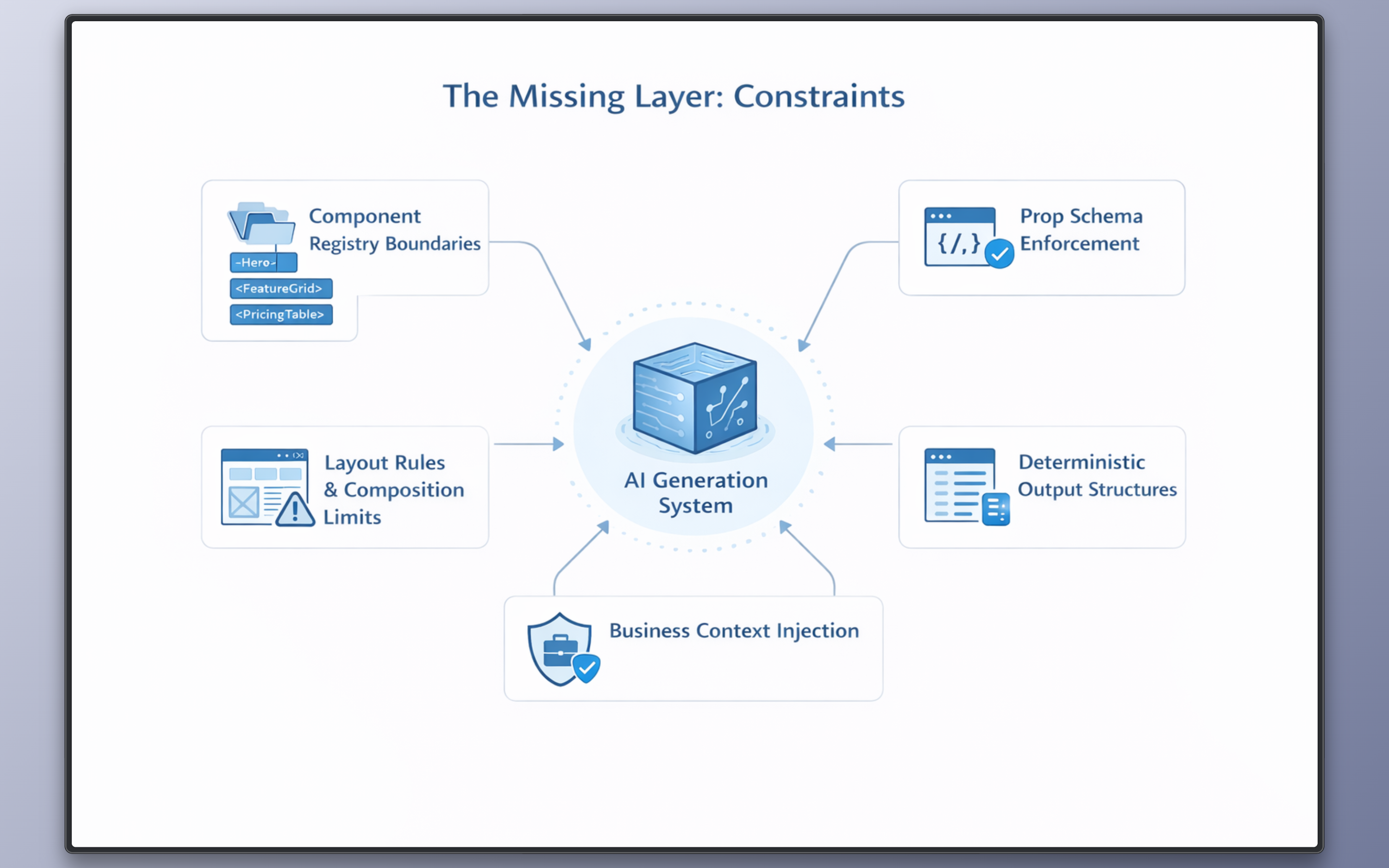

The Missing Layer: Constraints

In this context, constraints define what can be generated, how it can be configured, how components can be composed, and how the result is represented at runtime. In real systems, those constraints are implemented through concrete mechanisms like component registry boundaries, schema validation, composition rules, and structured runtime output.

Component registry boundaries limit generation to approved React components. Instead of synthesizing arbitrary markup, the model assembles interfaces from predefined primitives such as Hero, FeatureGrid, or PricingTable. Prop schema enforcement validates inputs against typed definitions, ensuring that required fields, enumerations, and data shapes conform to system expectations. For example, a pricing component may require a structured array of tiers with defined attributes rather than free-form content.

Layout rules and composition limits restrict how components can be nested, preventing structurally invalid trees. Business context injection embeds brand, regulatory, or domain constraints directly into the generation process. Deterministic output structures, typically expressed as structured JSON, ensure predictable rendering and traceable state transitions across environments.
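To make these mechanisms concrete, here is a rough sketch of a registry that enforces prop schemas and nesting rules on a generated tree. Everything in it (`FieldRule`, `ComponentSpec`, `registry`, `validateNode`, and the `PricingTier` component) is a hypothetical illustration, not the API of Puck or any other library. A real system would more likely rely on an established schema validator.

```typescript
// All names here are hypothetical; this is a sketch, not a library API.
type FieldRule =
  | { kind: "string"; required: boolean }
  | { kind: "enum"; required: boolean; values: string[] };

interface ComponentSpec {
  fields: Record<string, FieldRule>;
  allowedChildren: string[]; // composition rule: types that may nest inside
}

interface GeneratedNode {
  type: string;
  props: Record<string, unknown>;
  children?: GeneratedNode[];
}

// Registry boundary: only these component types may appear in output.
const registry: Record<string, ComponentSpec> = {
  Page: { fields: {}, allowedChildren: ["Hero", "PricingTable"] },
  Hero: {
    fields: {
      title: { kind: "string", required: true },
      align: { kind: "enum", required: false, values: ["left", "center"] },
    },
    allowedChildren: [],
  },
  PricingTable: { fields: {}, allowedChildren: ["PricingTier"] },
  PricingTier: {
    fields: {
      name: { kind: "string", required: true },
      price: { kind: "string", required: true },
    },
    allowedChildren: [],
  },
};

const hasOwn = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

// Collect every violation in the tree instead of stopping at the first one.
function validateNode(node: GeneratedNode, path: string = node.type): string[] {
  if (!hasOwn(registry, node.type)) return [`${path}: unregistered component`];
  const spec = registry[node.type];
  const errors: string[] = [];
  for (const name of Object.keys(spec.fields)) {
    const rule = spec.fields[name];
    const value = node.props[name];
    if (value === undefined) {
      if (rule.required) errors.push(`${path}.${name}: missing required prop`);
    } else if (typeof value !== "string") {
      errors.push(`${path}.${name}: expected string`);
    } else if (rule.kind === "enum" && rule.values.indexOf(value) === -1) {
      errors.push(`${path}.${name}: not one of ${rule.values.join("|")}`);
    }
  }
  for (const name of Object.keys(node.props)) {
    if (!hasOwn(spec.fields, name)) errors.push(`${path}.${name}: unknown prop`);
  }
  for (const child of node.children ?? []) {
    if (spec.allowedChildren.indexOf(child.type) === -1) {
      errors.push(`${path}: ${child.type} not allowed here`);
    } else {
      errors.push(...validateNode(child, `${path}>${child.type}`));
    }
  }
  return errors;
}

// A generated tree with a missing prop, a bad enum value, and a stray component.
const generated: GeneratedNode = {
  type: "Page",
  props: {},
  children: [
    { type: "Hero", props: { align: "right" } },
    { type: "Carousel", props: {} },
  ],
};

console.log(validateNode(generated));
```

Returning a list of violations rather than throwing lets a generation layer feed the errors back into a retry, or reject the output before it reaches the editor.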

How Puck AI Implements Architectural Constraints

With Puck and Puck AI, the constraints we discussed above are implemented and enforced at the system level rather than inferred at prompt time.

Puck AI is a generative UI layer built on top of Puck’s React visual editor that enables page generation within predefined architectural boundaries.

It operates by assembling interfaces exclusively from registered React components instead of generating code. When a user requests something like “a landing page for an AI analytics platform,” the system composes that page from the components already configured in the application. The result is a deterministic component tree interpreted by Puck at runtime, keeping everything aligned with the existing design system and application logic.

In this workflow, generation is an orchestration process over defined primitives. The AI behavior is shaped by the editor configuration and the components supplied by the development team.

Puck AI Characteristics

Puck AI demonstrates bounded generation through the following characteristics:

- Generation from Registered React Components: The AI selects and composes only those components explicitly registered in the Puck configuration. If the system exposes `Hero`, `FeatureGrid`, and `PricingTable`, those are the only structural primitives available. No new layout elements are introduced outside the approved library.

- Structured Page Schema Output: The result of the generation is a structured page definition that maps component types to their configured props and hierarchical placement. This schema is interpreted by Puck at runtime to render the interface. The AI does not directly control rendering logic.

- Business Context and Brand Rules: Configuration layers allow teams to define tone, domain context, and structural expectations. These parameters influence how sections are assembled and how content fields are populated, ensuring alignment with product positioning and organizational standards.

- Design System Preservation: Because rendering occurs through predefined components, spacing, typography, and layout behavior remain governed by the existing design system. Visual consistency is enforced by the component implementation, not by prompt phrasing.

- Deterministic Behavior via Configuration: Through controlled configuration of available components, fields, and generation parameters, teams can narrow variability in output. The same structural intent produces predictable component trees aligned with system rules.
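The separation between generated data and rendering logic described above can be sketched without any framework. The example below is a simplified, hypothetical model: `PageData`, `Block`, `renderers`, and `renderPage` are illustrative names, the data shape is only loosely inspired by a list of typed blocks and is not Puck's actual type definitions, and plain string templates stand in for real React components.

```typescript
// Simplified, hypothetical page data: a flat list of typed blocks.
interface Block {
  type: string;
  props: Record<string, string>;
}

interface PageData {
  content: Block[];
}

type Renderer = (props: Record<string, string>) => string;

// Escape text so generated content cannot inject markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Stand-ins for registered components. Markup and class names live here,
// so the generated data never controls styling or structure directly.
const renderers: Record<string, Renderer> = {
  Hero: (p) => `<section class="hero"><h1>${escapeHtml(p.title ?? "")}</h1></section>`,
  FeatureGrid: (p) => `<section class="features"><h2>${escapeHtml(p.heading ?? "")}</h2></section>`,
};

// Interpret the page data at runtime; unknown block types are rejected.
function renderPage(data: PageData): string {
  return data.content
    .map((block) => {
      const known = Object.prototype.hasOwnProperty.call(renderers, block.type);
      if (!known) throw new Error(`Unregistered component: ${block.type}`);
      return renderers[block.type](block.props);
    })
    .join("\n");
}

// What a generation step might emit: data only, no markup.
const page: PageData = {
  content: [
    { type: "Hero", props: { title: "Analytics & AI" } },
    { type: "FeatureGrid", props: { heading: "Features" } },
  ],
};

console.log(renderPage(page));
```

Because the generator can only emit `type` and `props`, swapping the implementation of `Hero` changes every generated page at once, which is the property the design-system bullet relies on.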

Decision Framework: When AI Should and Shouldn’t Generate UI

| Scenario | AI Should Generate UI | AI Should Not Generate UI |
|---|---|---|
| Component Model | When the system exposes a predefined component registry and generation is limited to those primitives | When the model is free to create arbitrary markup or new structural abstractions |
| Design System Enforcement | When rendering is handled by approved components that encapsulate spacing, typography, and layout rules | When styling and layout are generated directly without enforcement of design tokens or system constraints |
| Output Format | When the result is a validated, structured schema interpreted by the application runtime | When the output consists of raw HTML, inline styles, or loosely structured code |
| Context and Governance | When brand tone, business rules, and domain constraints are injected into the generation layer | When generation relies solely on prompt phrasing without embedded organizational context |
| Determinism and Stability | When output variability is controlled through configuration and predictable component trees are required | When identical prompts can yield structurally divergent results that complicate testing and review |
| Production Readiness | When generated output can be rendered directly within the existing architecture without refactoring | When engineering intervention is required to normalize, restructure, or harden the generated interface |

Final Verdict

Generative UI is effective when it operates within defined architectural boundaries and established component systems. When generation bypasses those constraints, inconsistency and downstream rework become unavoidable. Production-grade outcomes require schema enforcement, controlled composition, and system-level governance.

To see this model in practice, explore Puck for structured visual editing and try Puck AI for constrained page generation workflows. Puck AI is currently available in beta for teams evaluating controlled generative UI in production environments.

Learn more about Puck

If you’re interested in learning more about Puck, check out the demo or read the docs. If you like what you see, please give us a star on GitHub to help others find Puck too!